Research Methods and Methodology

| Site: | Newgate University Minna - Elearning Platform |
|---|---|
| Course: | Research Methodology & Proposal Writing |
| Book: | Research Methods and Methodology |
| Printed by: | Guest user |
| Date: | Saturday, 24 January 2026, 2:20 PM |

Description

In the context of academic and scientific research, research methods and research methodology are two terms that are often used interchangeably but have distinct meanings.

Together, methods and methodology provide the foundation for designing and executing a research study, ensuring that the study is both rigorous and systematic, while appropriately addressing the research questions posed.

This module explains each concept in detail, highlights their differences and applications, and discusses how they work together in the research process.

1. Research Methods

Research methods refer to the specific techniques or procedures used to collect and analyze data to achieve a given objective during a research study.

These are the tools or procedures employed by researchers to answer their research questions, test hypotheses, or explore a specific problem.

Types of Research Methods:

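- Qualitative Research Methods: These collect non-numerical data, such as words, images, or observations, to explore meanings, experiences, and perspectives. Common qualitative methods include: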

- Interviews: Conversations with individual participants, which may be structured, semi-structured, or unstructured.

- Focus Groups: Discussions with a small group of people to gather opinions or insights.

- Case Studies: In-depth investigations of a single case or a few cases.

- Ethnography: Immersive observation of a particular community or culture.

- Content Analysis: Analyzing text, media, or other content for patterns or themes.

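- Quantitative Research Methods: These collect numerical data that can be measured and analyzed statistically, for example to test hypotheses or to quantify relationships between variables. Common quantitative methods include: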

- Surveys: Questionnaires with closed-ended questions to collect data from large samples.

- Experiments: Controlled studies that manipulate variables to observe effects.

- Observations: Structured data collection where behaviors or phenomena are counted or measured.

- Longitudinal Studies: Studies that follow the same subjects over a period of time to observe changes.

- Mixed Methods: These combine qualitative and quantitative methods in a single study. For example, a researcher might use qualitative interviews to explore participants' experiences and then use quantitative surveys to measure the frequency or prevalence of those experiences across a larger sample.

2. Research Methodology

- Research methodology refers to the philosophical framework or systematic approach that guides how a researcher conducts their research.

- It is the overarching strategy that determines the research process, the approach to data collection and analysis, and the rationale behind the selection of specific methods.

Key Elements of Research Methodology:

1. Philosophical Approach (Ontology and Epistemology):

- Research methodology involves the philosophical stance behind the research. For instance, it addresses questions such as:

- What is the nature of reality (ontology)?

- How do we know what we know (epistemology)?

- How is knowledge acquired?

- Common research philosophies include:

- Positivism: Belief in an objective reality that can be measured and observed. Researchers following this approach often use quantitative methods.

- Interpretivism: Focuses on understanding human experiences and meanings. This approach often aligns with qualitative research methods.

- Pragmatism: Advocates for using whatever methods are most practical and effective to answer the research question, often leading to mixed methods.

- Constructivism: Emphasizes that knowledge is socially constructed, so researchers should seek to understand participants' own perspectives.

2. Research Design:

- The design of a study outlines how the research is organized and structured. It is the framework that guides the collection, measurement, and analysis of data.

- Types of research designs include:

- Descriptive Research: Aims to describe the characteristics of a phenomenon.

- Exploratory Research: Seeks to explore new topics or gain a deeper understanding of a problem.

- Experimental Research: Involves manipulating variables to determine causal relationships.

- Correlational Research: Examines the relationship between two or more variables without manipulation.

3. Sampling Strategy:

- Methodology also involves determining how participants or data sources will be selected. This includes whether a study will use a random sample, purposive sample, or convenience sample, among other strategies.

4. Data Collection Methods:

- While research methods refer to the specific techniques, the methodology refers to how those methods fit into the broader approach. For example, a qualitative methodology might use interviews or focus groups as research methods, and a quantitative methodology might use surveys or experiments.

5. Data Analysis Techniques:

- The methodology also includes how data will be analyzed. Qualitative analysis might involve thematic coding, grounded theory, or content analysis, while quantitative analysis might involve statistical techniques like regression, correlation, or hypothesis testing.

3. How Research Methods and Methodology Work Together

Research methods and methodology work in tandem to form the foundation of the entire research process. Here’s how they interact:

1. Theoretical Framework: The methodology provides the philosophical and theoretical basis for the research, guiding the researcher in choosing appropriate methods. For instance, a positivist methodology may guide a researcher to use quantitative methods like surveys or experiments to test hypotheses.

2. Alignment: The methods chosen (e.g., surveys, interviews, experiments) must align with the methodology. For example, a qualitative methodology would typically involve methods such as interviews or focus groups, whereas a quantitative methodology might involve surveys or statistical analysis.

3. Coherent Research Design: The methodology guides the overall research design (e.g., whether the research will be exploratory, descriptive, or experimental), and the methods determine how data will be collected to answer the research questions posed within the design.

Examples of How Methods and Methodology Interact:

1. Qualitative Methodology:

- Methodology: Interpretivism (understanding the meanings behind human behavior).

- Methods: Semi-structured interviews, thematic analysis.

- The researcher might aim to explore how people interpret their experiences with a social phenomenon (e.g., the effects of remote work on employee well-being).

2. Quantitative Methodology:

- Methodology: Positivism (belief in objective measurement of reality).

- Methods: Surveys, experiments, statistical analysis.

- The researcher might measure the impact of remote work on employee productivity through a survey with a large sample size and analyze the data using statistical tests.

3. Mixed Methods:

- Methodology: Pragmatism (using both qualitative and quantitative methods to answer a research question).

- Methods: Qualitative interviews to explore perceptions of remote work and a quantitative survey to measure its impact on productivity.

- The researcher could use qualitative methods to explore in-depth personal experiences and quantitative methods to generalize findings to a larger population.

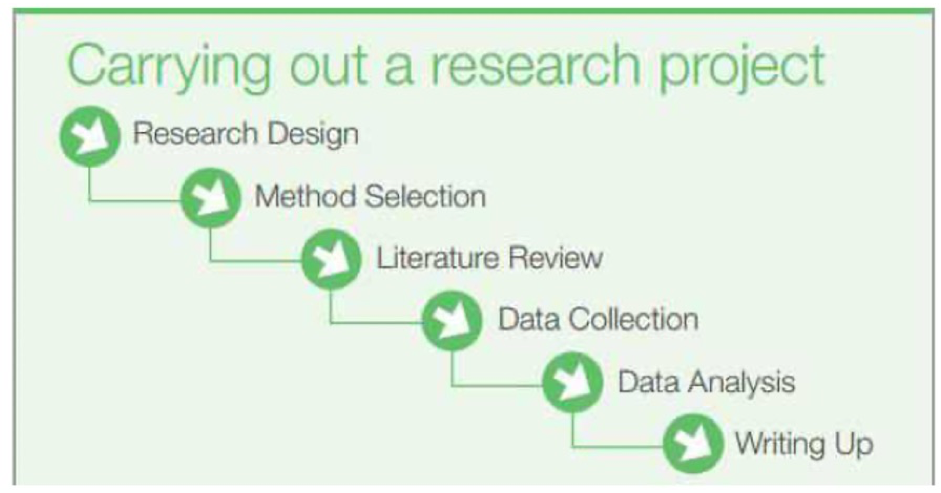

4. The Research Process: Steps Involved in Conducting Research

- The research process is a structured and systematic sequence of steps that guide researchers from the inception of an idea to the conclusion of a research study.

- While the exact process can vary depending on the field of study, the research question, and the methodology chosen, there are common stages that apply to most research endeavors.

- This section discusses the key steps involved in conducting research. Following these steps helps researchers make their study thorough, valid, and reliable, so that it can contribute meaningfully to knowledge in their field.

1. Identifying and Defining the Research Problem

Objective: To clearly understand the issue or question you want to investigate.

- Problem Identification: Start by identifying a research problem or topic of interest. This could come from gaps in existing knowledge, real-world issues, or personal curiosity.

- Literature Review: Conduct a preliminary literature review to understand what has already been researched on the topic. This helps you frame your problem more precisely and avoid duplication.

- Research Questions: Define specific, clear, and focused research questions or hypotheses. These questions should be answerable through research.

Example:

If you’re interested in education, your research problem might be: "How does online learning impact student performance in high school?"

2. Reviewing the Literature

Objective: To gain a thorough understanding of existing knowledge related to the research topic and identify gaps.

- Comprehensive Review: Conduct a detailed literature review to identify previous studies, theories, and findings. This helps you see where your research fits in the broader academic conversation.

- Identify Gaps: Based on the literature, determine where knowledge is lacking or where new questions have emerged. This is where your research will make a contribution.

- Theoretical Framework: In some cases, the literature review also helps you identify theories that will frame your research methodology and analysis.

Example:

You may find that most research focuses on college students’ performance in online learning but very little has been done on high school students, creating a gap in the literature.

3. Formulating the Research Hypothesis or Objective

Objective: To clearly state what you aim to prove or explore.

- Hypothesis Formation: If conducting hypothesis-driven research (especially in quantitative studies), you will formulate a hypothesis, which is a testable prediction about the relationship between variables.

- Example: “Online learning will improve high school students' performance in mathematics compared to traditional classroom learning.”

- Research Objective: In some cases, especially in exploratory or qualitative research, you may state the research objective instead, such as “To explore the experiences of high school students with online learning.”
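Written formally, the example hypothesis above can be stated as a pair of statistical hypotheses. This is a sketch that assumes "performance" is operationalized as the mean mathematics test score in each group; the symbols below are introduced here only for illustration.

```latex
H_0:\ \mu_{\text{online}} \le \mu_{\text{traditional}}
\qquad
H_1:\ \mu_{\text{online}} > \mu_{\text{traditional}}
```

Because the hypothesis predicts a direction ("will improve"), a one-tailed test fits it; a researcher who did not want to commit to a direction would instead test $H_1: \mu_{\text{online}} \ne \mu_{\text{traditional}}$.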

4. Designing the Research Methodology

Objective: To plan the methods and procedures for data collection and analysis.

- Choose Methodology: Decide on the approach you will take, such as qualitative, quantitative, or mixed methods.

- Design Research Methods: Select specific methods for data collection (e.g., surveys, interviews, experiments, observations) based on your research objectives and hypothesis.

- Sampling: Decide how you will select participants or data points (e.g., random sampling, purposive sampling, convenience sampling).

- Operationalization: Determine how you will measure the variables you're studying. For example, how will you define and measure “student performance” in your study?

Example:

For the online learning study, you might use surveys to gather students' perceptions, use test scores to measure performance, and select a sample of high school students from several schools.
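The sampling step can be illustrated with a short Python sketch of simple random sampling, in which every student in the sampling frame has an equal chance of being selected. The school names, student IDs, sample size, and the helper `simple_random_sample` are hypothetical and exist only for this example.

```python
import random

# Hypothetical sampling frame: student IDs from three schools.
# School names and sizes are invented for illustration.
sampling_frame = {
    "School A": [f"A{i:03d}" for i in range(1, 121)],
    "School B": [f"B{i:03d}" for i in range(1, 81)],
    "School C": [f"C{i:03d}" for i in range(1, 101)],
}

def simple_random_sample(frame, n, seed=None):
    """Draw n students without replacement, each with an equal chance of selection."""
    population = [sid for ids in frame.values() for sid in ids]
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible and auditable
    return rng.sample(population, n)

sample = simple_random_sample(sampling_frame, n=30, seed=2024)
print(len(sample), sample[:5])
```

Fixing the seed lets another researcher reproduce the exact draw. A convenience sample, by contrast, would take whichever students are easiest to reach (for example, the first 30 students at one school), which makes the results harder to generalize.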

5. Collecting Data

Objective: To gather the necessary information to answer the research questions or test the hypothesis.

- Data Collection Methods: Implement the research methods chosen in the previous step. For instance, distribute surveys, conduct interviews, or run experiments.

- Pilot Testing: In some cases, it’s advisable to test your data collection methods on a smaller sample before fully rolling them out (e.g., pilot surveys or trial runs for experiments).

- Ethical Considerations: Ensure that you obtain the necessary approvals (e.g., from an ethics review board) and informed consent if dealing with human participants. Safeguard participants' rights and privacy.

Example:

You conduct an online survey of 500 high school students, asking them about their experiences with online learning, their study habits, and their performance on standardized tests.

6. Analyzing the Data

Objective: To process and interpret the collected data to derive meaningful results.

- Data Cleaning: Before analysis, check that the data is free of errors. This includes removing incomplete or inconsistent responses and examining outliers to decide whether they are genuine values or errors that need correcting.

- Statistical Analysis (for Quantitative Data): If you have quantitative data, use statistical tools (e.g., SPSS, R, Excel) to run analyses such as correlation, regression, or ANOVA.

- Qualitative Analysis: If you're dealing with qualitative data (e.g., interviews or open-ended survey responses), use methods like thematic analysis, grounded theory, or content analysis to identify patterns and themes.

- Interpretation: Draw conclusions based on your analysis. Does the data support your hypothesis, or does it reveal something new?

Example:

You might use statistical tests to determine if there is a significant difference in the performance of students who took online courses versus those who took traditional courses. For qualitative responses, you may analyze the students' feedback on their experiences.
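Here is a minimal Python sketch of the data-cleaning and group-comparison steps described above, using only the standard library. The records, the 0 to 100 valid score range, and the helpers `clean` and `welch_t` are assumptions made for illustration. The sketch computes Welch's t statistic, a two-sample t-test variant that does not assume equal group variances; obtaining the p-value requires the t distribution, which tools such as R or SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` provide.

```python
from math import sqrt
from statistics import mean, variance

# Hypothetical survey records; None marks a missing answer.
records = [
    {"group": "online", "score": 70},
    {"group": "online", "score": 75},
    {"group": "online", "score": 80},
    {"group": "online", "score": None},        # incomplete: dropped
    {"group": "traditional", "score": 60},
    {"group": "traditional", "score": 65},
    {"group": "traditional", "score": 70},
    {"group": "traditional", "score": 640},    # out of range: dropped here
]

def clean(records, low=0, high=100):
    """Drop incomplete records and scores outside the valid range.

    Out-of-range values are simply dropped in this sketch; in practice you
    might check the original form and correct a data-entry error instead."""
    return [
        r for r in records
        if r["group"] is not None and r["score"] is not None
        and low <= r["score"] <= high
    ]

def welch_t(a, b):
    """Welch's t statistic and degrees of freedom for two independent groups."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

cleaned = clean(records)
online = [r["score"] for r in cleaned if r["group"] == "online"]
traditional = [r["score"] for r in cleaned if r["group"] == "traditional"]
t, df = welch_t(online, traditional)
print(f"kept {len(cleaned)} of {len(records)} records; t = {t:.3f}, df = {df:.1f}")
```

Three scores per group is far too few for a real study; the numbers are chosen only so the arithmetic is easy to check by hand (here t is about 2.45 with 4 degrees of freedom).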

7. Drawing Conclusions

Objective: To summarize the findings and discuss their implications.

- Interpret Results: Summarize the key findings from your data analysis. How do they relate to the original research questions or hypotheses?

- Limitations: Acknowledge any limitations in your study, such as sample size, data collection constraints, or potential biases.

- Implications: Discuss the broader implications of your findings. What do they mean for theory, practice, or policy? Do they confirm, challenge, or extend previous research?

Example:

You conclude that online learning did improve performance in mathematics for high school students, but that the improvement was smaller than expected. You also note that students’ engagement levels played a critical role.

8. Writing the Research Report or Thesis

Objective: To communicate your findings in a clear, structured manner.

- Introduction: Briefly introduce the research problem, objectives, and hypotheses. State the significance of the study.

- Literature Review: Summarize relevant research and theories that frame your study.

- Methodology: Describe the research design, sampling methods, and tools used for data collection and analysis.

- Results: Present the findings in a clear, systematic manner. Use tables, charts, and graphs to support your findings.

- Discussion: Interpret the results, link them to your hypotheses, and explore their implications.

- Conclusion: Summarize the research and suggest areas for further study.

Example:

In your research report, you might include tables that compare student test scores, figures summarizing survey results, and a discussion of how your findings relate to previous studies on online learning.

9. Revising and Finalizing the Report

Objective: To ensure clarity, coherence, and accuracy in the final research document.

- Editing: Carefully proofread the report for grammar, spelling, and clarity. Ensure that your arguments are logically organized and easy to follow.

- Feedback: Seek feedback from peers, advisors, or colleagues who can provide suggestions or critiques to improve the report.

- Final Submission: Submit your research paper, thesis, or dissertation according to the guidelines of the journal, institution, or publisher you’re working with.

10. Presenting and Disseminating the Findings

Objective: To share your research findings with a wider audience.

- Academic Journals: Submit your findings to peer-reviewed journals for publication.

- Conferences: Present your research at academic or professional conferences. You may deliver oral presentations, poster presentations, or workshops.

- Public Communication: Depending on the significance of your research, you may communicate your findings to the public through press releases, blogs, or social media.

Example:

You submit your findings to an educational journal and present a poster at a conference on educational technology.

5. Criteria for Good Research

- Good research is guided by certain standards and criteria that ensure its credibility, accuracy, and impact.

- These standards help researchers to produce results that are trustworthy, reproducible, and respectful of the participants and community.

- The three major criteria for evaluating the quality of research are validity, reliability, and ethical considerations.

- These three pillars ensure that the research findings are both accurate and trustworthy, and that the study respects the rights and dignity of participants. By adhering to these criteria:

- Validity ensures the research is measuring what it is supposed to measure.

- Reliability ensures that the findings are consistent and reproducible.

- Ethical considerations ensure that the research process adheres to moral guidelines and protects participants.

Together, these criteria help produce research that not only contributes to knowledge but does so in a responsible, meaningful, and impactful way.

1. Validity in Research

Definition:

Validity refers to the degree to which a research study measures what it intends to measure. It reflects the accuracy and truthfulness of the results. In other words, a study is valid if its findings can be confidently interpreted as answering the research question or testing the hypothesis.

Types of Validity:

- Internal Validity:

- This type of validity focuses on whether the results of the study are actually caused by the variables being studied, rather than by other, extraneous factors.

- Threats to Internal Validity: Confounding variables, bias in sampling, experimenter effects, or flaws in the research design (e.g., lack of control group).

- Example: In an experiment testing the effect of online learning on student performance, internal validity would be compromised if the groups were not comparable at the start of the study (e.g., if one group was more motivated than the other).

- External Validity (Generalizability):

- This refers to the extent to which the findings of the study can be applied to real-world settings or to populations outside the study sample.

- External validity is important because researchers want to know if their findings can apply to other situations, environments, or groups.

- Example: If an experiment on the effect of online learning on high school students was conducted only with a small group of students from a specific region, the findings may not apply to all high school students nationwide.

- Construct Validity:

- Construct validity refers to whether the research accurately measures the concept it intends to measure. It ensures that the operational definitions of the variables are consistent with their theoretical definitions.

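- Example: If a study on "student motivation" measures it only by GPA, it may suffer from low construct validity, because GPA is influenced by many factors besides motivation (such as prior ability and teaching quality) and is therefore not a direct or comprehensive measure of motivation.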

- Criterion-Related Validity:

- This type of validity assesses how well scores on a measure correspond to, or predict, an outcome on another established measure (the criterion). It is often used to test the accuracy of diagnostic tools or instruments.

- Example: The validity of a standardized test (e.g., SAT) can be assessed by how well it predicts college performance.

Ensuring Validity:

- Clear and precise operational definitions.

- Use of appropriate control groups or comparisons.

- Careful attention to sample selection and matching groups.

- Appropriate research design (e.g., randomized controlled trials for causality).
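Random assignment is the usual way to make comparison groups equivalent at the start of an experiment, which addresses the internal validity threat described in the online learning example above. The following Python sketch assumes a simple two-group design; the participant IDs, group names, and the helper `randomly_assign` are hypothetical.

```python
import random

def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into two groups of (nearly) equal size.

    Because assignment depends only on chance, characteristics such as
    motivation should, on average, be similar across the two groups."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the caller's list is not modified
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"online": shuffled[:half], "traditional": shuffled[half:]}

# Hypothetical participant IDs.
participants = [f"S{i:02d}" for i in range(1, 21)]
groups = randomly_assign(participants, seed=7)
print("online:", groups["online"])
print("traditional:", groups["traditional"])
```

Random assignment makes groups comparable on average but does not guarantee balance in a small sample, so researchers often also compare baseline characteristics, such as prior test scores, across the groups.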

2. Reliability in Research

Definition:

Reliability refers to the consistency and stability of the measurement process. A research instrument or tool is considered reliable if it produces consistent results over time or across different situations.

Types of Reliability:

- Test-Retest Reliability:

- This type assesses the consistency of a measure when it is applied to the same group of individuals at different times.

- Example: If you administer a questionnaire on student attitudes toward online learning at two different times and get similar results (assuming the attitudes themselves have not changed in between), the questionnaire has good test-retest reliability.

- Inter-Rater Reliability:

- This refers to the degree to which different observers or raters agree on the measurement of a particular variable or behavior.

- Example: If two researchers independently code interview transcripts and agree on the classification of themes, the coding process has good inter-rater reliability.

- Internal Consistency (Cronbach's Alpha):

- Internal consistency refers to the degree to which items on a test or scale measure the same construct and produce consistent results.

- Example: A scale measuring "student satisfaction" should have items that consistently reflect this concept. If some items are unrelated to the construct, internal consistency will be low.

- Parallel-Forms Reliability:

- This type of reliability involves comparing two different forms of the same test or measure to see if they yield consistent results.

- Example: If two different versions of a questionnaire measuring student attitudes toward a topic produce similar results, the questionnaire has good parallel-forms reliability.
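Internal consistency is commonly summarized with Cronbach's alpha, computed as alpha = k / (k - 1) x (1 - sum of item variances / variance of total scores), where k is the number of items. The Python sketch below implements that formula; the ratings and the `cronbach_alpha` helper are invented for illustration.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents-by-items table of scores.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_variances = sum(variance(item) for item in items)
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_variances / total_variance)

# Hypothetical 1-5 ratings: 5 students answering 4 "student satisfaction" items.
ratings = [
    [4, 5, 4, 4],
    [2, 2, 3, 2],
    [5, 5, 5, 4],
    [3, 3, 2, 3],
    [4, 4, 4, 5],
]
print(f"alpha = {cronbach_alpha(ratings):.2f}")
```

For these ratings alpha is about 0.94. A widely used rule of thumb treats values of about 0.70 or higher as acceptable, although the appropriate threshold depends on how the instrument will be used.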

Ensuring Reliability:

- Clear operational definitions of variables.

- Use of standardized instruments or procedures.

- Training researchers and raters to ensure consistency.

- Repeated measurements or data collection points.
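After raters are trained, inter-rater reliability is often checked with Cohen's kappa, which corrects the raw percentage agreement between two raters for the agreement expected by chance: kappa = (p_o - p_e) / (1 - p_e). The Python sketch below assumes two coders assigning categorical codes; the theme labels and the `cohens_kappa` helper are hypothetical.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same, non-empty set of items")
    n = len(rater_a)
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability that both raters pick the same category,
    # given how often each rater used each category.
    p_expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical theme codes assigned to 10 interview excerpts by two researchers.
coder_1 = ["access", "access", "access", "access", "access",
           "motivation", "motivation", "motivation", "motivation", "motivation"]
coder_2 = ["access", "access", "access", "access", "motivation",
           "motivation", "motivation", "motivation", "motivation", "access"]
print(f"kappa = {cohens_kappa(coder_1, coder_2):.2f}")
```

Here the coders agree on 8 of 10 excerpts (80%), but because 50% agreement would be expected by chance alone, kappa is 0.60.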

3. Ethical Considerations in Research

Definition:

Ethical considerations refer to the moral principles that guide the conduct of research. Ethical research ensures that participants are treated with respect and that their rights are protected throughout the study. Ethical guidelines also ensure the integrity of the research process.

Key Ethical Principles in Research:

- Informed Consent:

- Participants must be fully informed about the nature of the research, the procedures involved, and any risks they may face. They must voluntarily agree to participate without any coercion.

- Example: Before conducting an interview or survey, the researcher should explain the study’s purpose, the time commitment required, and how the data will be used, ensuring that participants sign a consent form.

- Confidentiality and Privacy:

- Researchers must ensure that participants' personal information and data are kept confidential and only used for research purposes. If possible, data should be anonymized.

- Example: In a study involving student performance, names and other identifying details should not be included in the final report, and responses should be coded to protect identity.

- Protection from Harm:

- Research must avoid causing any physical, psychological, or emotional harm to participants. If risks are present, they must be minimized or managed.

- Example: If a study on mental health involves discussing sensitive issues, the researcher should provide participants with information about support resources if they experience distress.

- Voluntary Participation:

- Participants must be free to participate or withdraw from the study at any point without penalty or negative consequences.

- Example: If participants feel uncomfortable during the research process, they should be able to withdraw their data without any repercussions.

- Deception and Debriefing:

- If deception is necessary for the study (e.g., withholding certain information to prevent biasing results), researchers must justify the necessity of deception. Afterward, participants should be fully debriefed about the true nature of the study.

- Example: In a psychological experiment involving group behavior, participants might be told that they are involved in a study about social interactions, not about competition, to avoid biasing their actions. After the study, they must be informed about the actual research aims.

- Fairness and Equity:

- Researchers must ensure that all participants are treated equally and fairly. This includes offering equal opportunities to participate, taking particular care with vulnerable groups, and avoiding exploitation.

- Example: If a research study involves a vulnerable group, such as children or economically disadvantaged people, the researcher should ensure that participation is voluntary and that these groups are not unduly exploited.

- Integrity and Honesty:

- Researchers must conduct their work honestly, avoiding any form of plagiarism, fabrication of data, or misrepresentation of results. The results should be reported accurately, whether they support the hypothesis or not.

- Example: If a researcher finds no significant effect of online learning on performance, they must report this truthfully rather than manipulating the data to show a positive outcome.

Ensuring Ethical Research:

- Obtain approval from an institutional review board (IRB) or ethics committee before starting the research.

- Use standardized consent forms and explain potential risks and benefits to participants.

- Be transparent about the study’s goals and procedures.

- Ensure that participants' privacy is maintained, and data is securely stored.

- Respect participants' autonomy and dignity throughout the research process.
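One common way to protect privacy, as in the student-performance example under "Confidentiality and Privacy", is to replace names with participant codes and keep the linkage key separate from the data. This is a minimal Python sketch under that assumption; the field names, the code format (`P001`, `P002`, and so on), the example names, and the `pseudonymize` helper are all hypothetical.

```python
def pseudonymize(records, id_field="name", prefix="P"):
    """Replace a direct identifier with participant codes.

    Returns the coded records plus a separate linkage key (code -> original
    identifier). The key should be stored securely and apart from the data."""
    lookup = {}  # original identifier -> code
    coded = []
    for record in records:
        identifier = record[id_field]
        if identifier not in lookup:
            lookup[identifier] = f"{prefix}{len(lookup) + 1:03d}"
        stripped = {k: v for k, v in record.items() if k != id_field}
        stripped["participant"] = lookup[identifier]
        coded.append(stripped)
    key = {code: identifier for identifier, code in lookup.items()}
    return coded, key

# Hypothetical records; the names are invented.
records = [
    {"name": "Ada", "school": "School A", "score": 72},
    {"name": "Musa", "school": "School B", "score": 65},
    {"name": "Ada", "school": "School A", "score": 78},  # same student, second test
]
coded, key = pseudonymize(records)
print(coded)
```

Replacing names in this way is pseudonymization rather than full anonymization: the key can re-identify participants, and other fields (such as school combined with age) may still identify someone, so both the key and the data need secure storage.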

6. Scientific Investigation Principles

- In scientific research, the principles of objectivity, control, and observation are foundational to ensuring that investigations are rigorous, reliable, and valid.


- Objectivity helps researchers avoid bias, ensuring their findings are based on evidence rather than personal beliefs.

- Control ensures that the research accurately measures the effects of the independent variable without interference from confounding factors.

- Observation forms the empirical foundation of scientific inquiry, allowing researchers to gather data and test hypotheses through careful, structured methods.

- Together, these three principles form the backbone of the scientific method, helping researchers reach conclusions that are grounded in empirical evidence.

By adhering to these principles, researchers ensure that their studies are scientifically rigorous, valid, and valuable contributions to knowledge.